Reinforcement Learning Part 6: Temporal Difference Learning

Following the previous part, we will continue exploring reinforcement learning with model-free algorithms. The previous parts can be found at the bottom of this article.

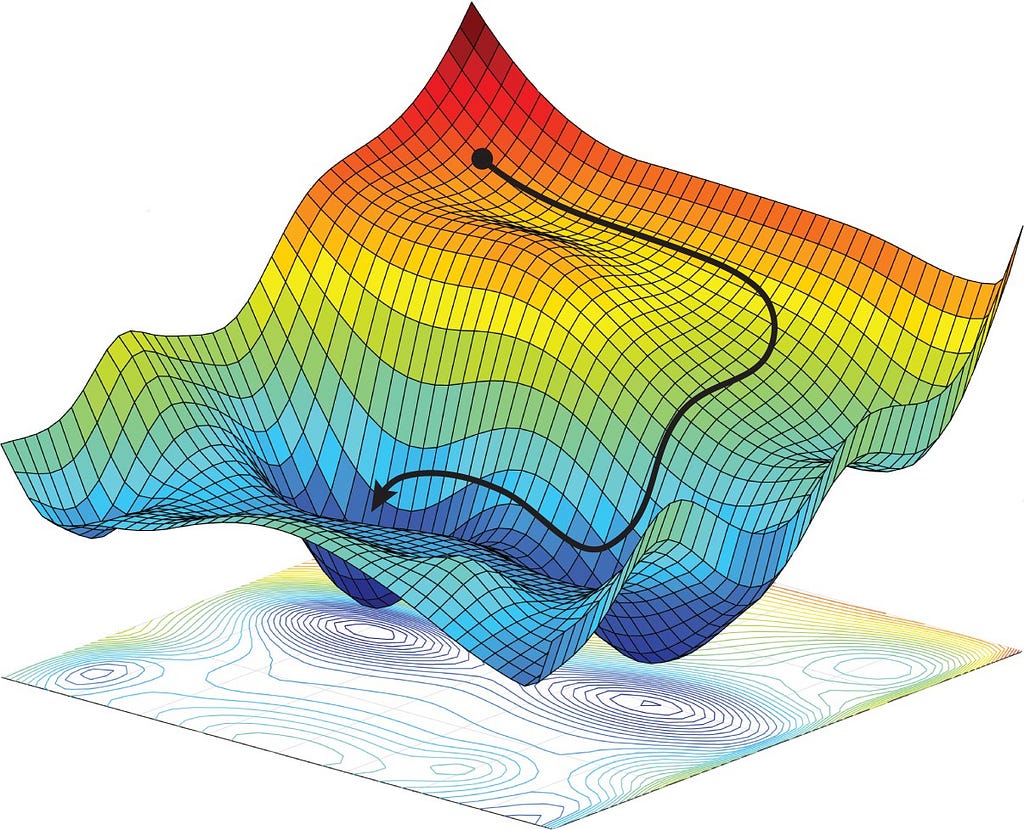

For all algorithms from now on, we will be using concepts from Stochastic Gradient Descent (SGD). Since SGD is familiar to most readers, I will skip the proofs behind it. Note, however, that although we will say "SGD" many times in this and the following articles, the term may refer to any one of the following: Stochastic Gradient Descent (where a single sample is used at each iteration...
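To make the single-sample case concrete, here is a minimal sketch built on a toy problem that is an assumption of this example, not taken from the article: estimating the mean E[X] of a random variable by minimising J(w) = E[(w - X)^2] / 2. For one sample x, the gradient with respect to w is (w - x), so each iteration uses exactly one sample. The helper name `sgd_mean_estimate` and its `step_size` parameter are hypothetical.

```python
import random


def sgd_mean_estimate(samples, step_size=None):
    """Estimate E[X] with single-sample SGD on J(w) = E[(w - X)^2] / 2.

    Hypothetical helper for illustration. For one sample x, the gradient of
    (w - x)^2 / 2 with respect to w is (w - x), so each update uses one sample.
    If step_size is None, the decaying step alpha_k = 1/k is used;
    otherwise the given constant step size is applied at every iteration.
    """
    w = 0.0  # initial estimate (arbitrary)
    for k, x in enumerate(samples, start=1):
        alpha = 1.0 / k if step_size is None else step_size
        w -= alpha * (w - x)  # one stochastic gradient step using one sample
    return w


if __name__ == "__main__":
    rng = random.Random(0)
    # Hypothetical data: 1000 draws from a normal distribution with mean 5
    xs = [rng.gauss(5.0, 1.0) for _ in range(1000)]
    print("SGD (alpha = 1/k): ", sgd_mean_estimate(xs))
    print("Sample mean:       ", sum(xs) / len(xs))
    print("SGD (alpha = 0.01):", sgd_mean_estimate(xs, step_size=0.01))
```

With the decaying step alpha_k = 1/k, the iterate equals the running sample mean after every update. A constant step size instead gives an exponentially weighted average that favours recent samples. The incremental form w <- w + alpha * (x - w) is the same shape that temporal difference updates take.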
